Unveiling the Black Box:

Making AI Understandable and Trustworthy

In a world increasingly driven by artificial intelligence (AI), we find ourselves entrusting complex decisions to machines. AI algorithms power systems that influence our lives in profound ways, from suggesting what to watch to choosing what we are going to wear tonight. Just think: when did you last type out a whole search query instead of clicking an autocomplete suggestion? However, this growing dependence on AI brings with it a challenge: the 'black box' phenomenon. AI models, often intricate and convoluted, make decisions that are difficult for humans to decipher. This is where the concept of Explainable AI (XAI) steps in, shedding light on the opaque realm of AI and making it transparent and comprehensible for all. There is also a company named xAI that was created "to understand the true nature of the universe"; that, however, is a topic for a future article.

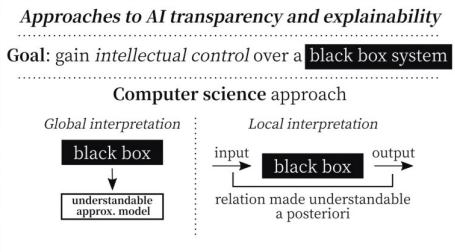

AI models, particularly deep learning models, are hailed for their exceptional accuracy and predictive power. Yet, as they become more powerful, they also become less transparent. Deep neural networks, for instance, are composed of numerous interconnected layers, making it almost impossible to trace how a given input leads to a given output. It is like dealing with a black box: we see what goes in and what comes out, but the inner workings remain elusive.

Explainable AI is a flourishing field that strives to bridge this gap between complexity and understanding. It advocates developing AI models that not only provide results but also reveal the reasoning behind those results in a human-understandable manner. Essentially, it aims to make AI more transparent and interpretable, enabling us to trust and comprehend the technology that governs our lives.

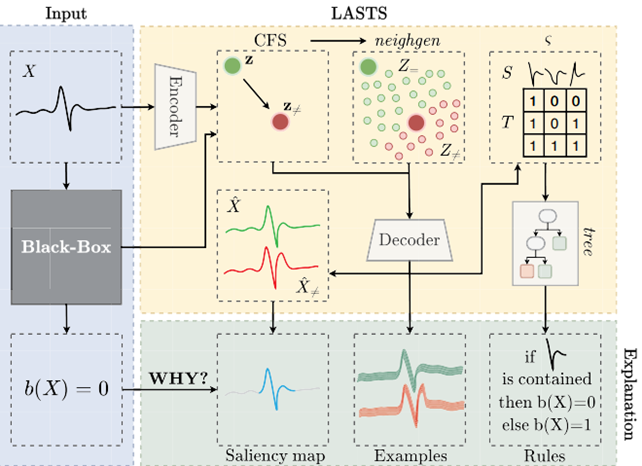

Researchers propose the LASTS (Local Agnostic Subsequence-based Time Series Explainer) framework to address the need for explainability in time series classification, providing interpretable explanations for any black-box predictor. By unveiling the logic behind the decisions made by these classifiers, LASTS enhances transparency and facilitates a deeper understanding of the classification process. Readers working in the field (AI/ML in this case) may find the framework easier to follow from the accompanying figure.

1. Feature Importance Analysis:

Understanding which features or inputs are most influential in a model's decision-making process helps in connecting outcomes to specific data points.
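One common way to measure this is permutation importance: shuffle one feature at a time and see how much the model's accuracy drops. The snippet below is a minimal sketch in plain Python, not a reference implementation. The `black_box` function, the synthetic data, and the three-feature setup are all made up for illustration.

```python
import random

def black_box(row):
    # Hypothetical opaque model (an assumption for this sketch):
    # leans heavily on feature 0, barely on feature 1, ignores feature 2.
    return 1 if row[0] + 0.1 * row[1] > 0 else 0

def accuracy(model, rows, labels):
    hits = sum(model(r) == y for r, y in zip(rows, labels))
    return hits / len(rows)

def permutation_importance(model, rows, labels, n_features, seed=0):
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        # Shuffle column j only, leaving every other feature untouched.
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        # Importance = how much accuracy is lost once the feature is scrambled.
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances

# Synthetic data; labels come from the model itself, so baseline accuracy is 1.0.
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
labels = [black_box(r) for r in rows]

importances = permutation_importance(black_box, rows, labels, n_features=3)
for j, score in enumerate(importances):
    print(f"feature {j}: accuracy drop {score:.3f}")
```

Because this toy model never reads feature 2, shuffling it changes nothing and its importance is exactly zero, while scrambling feature 0 costs the most accuracy.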

2. Local Interpretable Model-Agnostic Explanations (LIME):

LIME is a method aimed at understanding the predictions of complex machine learning models. It works by creating a simpler, interpretable model around a specific data point, providing insight into how the black box model arrived at its prediction for that instance. This local approximation helps users grasp the model's decision-making process in a more understandable manner.
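The idea can be sketched without any library: perturb the instance, weight each perturbed sample by how close it stays to the original, and fit a weighted linear model to the black box's outputs. The code below is a simplified illustration in the spirit of LIME, not the `lime` package itself. The `black_box` function, the Gaussian sampling, and the kernel width are assumptions chosen for this example.

```python
import math
import random

def black_box(x):
    # Hypothetical opaque model returning a score; nonlinear in x[0].
    return x[0] ** 2 + 3.0 * x[1]

def solve(a, b):
    # Gaussian elimination with partial pivoting for a small linear system.
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def explain_locally(model, instance, n_samples=500, scale=0.1, width=0.25, seed=0):
    rng = random.Random(seed)
    d = len(instance)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates X^T W X
    xtwy = [0.0] * (d + 1)                          # accumulates X^T W y
    for _ in range(n_samples):
        # Perturb the instance with small Gaussian noise.
        z = [v + rng.gauss(0.0, scale) for v in instance]
        dist2 = sum((zi - vi) ** 2 for zi, vi in zip(z, instance))
        w = math.exp(-dist2 / width ** 2)  # closer samples count more
        feats = [1.0] + [zi - vi for zi, vi in zip(z, instance)]
        y = model(z)
        for i in range(d + 1):
            xtwy[i] += w * feats[i] * y
            for j in range(d + 1):
                xtwx[i][j] += w * feats[i] * feats[j]
    coefs = solve(xtwx, xtwy)  # weighted least squares via normal equations
    return coefs[0], coefs[1:]

intercept, weights = explain_locally(black_box, [1.0, 2.0])
print("local feature weights:", [round(w, 2) for w in weights])
```

Around the point `[1.0, 2.0]` the surrogate's weights should land close to 2 and 3, the local slopes of this toy model, which is exactly the kind of per-instance explanation LIME is after.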

3. Decision Trees:

Decision trees represent a decision as a sequence of simple tests on the input, which makes them easy to read and to follow from input to outcome.
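To see why a tree explains itself, consider the small hand-written example below. The loan scenario, the feature names, and the thresholds are invented purely for illustration. The point is that the path taken through the tree doubles as the explanation for the prediction.

```python
# A hypothetical, hand-built tree: each inner node tests one feature
# against a threshold, each leaf holds a decision.
tree = {
    "feature": "income", "threshold": 40000,
    "low": {"leaf": "reject"},
    "high": {
        "feature": "debt_ratio", "threshold": 0.4,
        "low": {"leaf": "approve"},
        "high": {"leaf": "reject"},
    },
}

def predict_with_path(node, sample):
    # Walk from the root to a leaf, recording every test that was applied.
    path = []
    while "leaf" not in node:
        name, cut = node["feature"], node["threshold"]
        if sample[name] <= cut:
            path.append(f"{name} <= {cut}")
            node = node["low"]
        else:
            path.append(f"{name} > {cut}")
            node = node["high"]
    return node["leaf"], path

applicant = {"income": 55000, "debt_ratio": 0.3}
decision, path = predict_with_path(tree, applicant)
print(decision, "because", " and ".join(path))
```

For this applicant the script prints `approve because income > 40000 and debt_ratio <= 0.4`, a justification a person can check line by line.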

4. Layer-wise Relevance Propagation (LRP):

LRP assigns a relevance score to each component of the neural network, which helps in understanding how much each element contributed to the decision. Inspecting these scores gives an idea of which components, among the thousands in the network, influenced the output the most.
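As a rough sketch of how relevance flows backwards, the code below applies the epsilon variant of the LRP rule to a tiny two-layer ReLU network without biases. The weights and the input are arbitrary numbers picked for this example, and real LRP implementations cover many more layer types and rules.

```python
def dense(inputs, weights):
    # weights[i][j] connects input i to output unit j (no bias terms).
    n_out = len(weights[0])
    return [sum(a * weights[i][j] for i, a in enumerate(inputs)) for j in range(n_out)]

def relu(values):
    return [max(0.0, v) for v in values]

def lrp_dense(inputs, weights, relevance_out, eps=1e-9):
    # Epsilon rule: each input receives a share of every output unit's
    # relevance, proportional to its contribution a_i * w_ij to that unit.
    z = dense(inputs, weights)
    relevance_in = [0.0] * len(inputs)
    for j, zj in enumerate(z):
        denom = zj + (eps if zj >= 0 else -eps)  # stabiliser avoids division by zero
        for i, a in enumerate(inputs):
            relevance_in[i] += a * weights[i][j] / denom * relevance_out[j]
    return relevance_in

# Hypothetical network: 3 inputs -> 2 hidden ReLU units -> 1 output.
w1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]
w2 = [[2.0], [-1.0]]
x = [1.0, 2.0, 0.5]

# Forward pass.
hidden = relu(dense(x, w1))
output = dense(hidden, w2)

# Backward relevance pass, starting from the output value itself.
hidden_relevance = lrp_dense(hidden, w2, output)
input_relevance = lrp_dense(x, w1, hidden_relevance)

print("output:", output[0])
print("input relevance:", [round(r, 3) for r in input_relevance])
```

With these numbers the input relevances come out at roughly 3, 1 and -2, which add up to the output of 2. That conservation property is what lets the scores be read as each input's share of the prediction.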

Explainable AI finds application in numerous domains, ensuring AI models are more than just prediction engines.

AI is the future, and it will become (and probably already has become) an essential part of life. As it continues to integrate into our lives, the need for understanding and trust becomes paramount. Explainable AI offers a promising path to make this a reality. By lifting the veil of complexity, we can build AI systems that not only excel in performance but also ensure that humanity remains at the heart of technological advancements. Our baseless fear that AI will usurp humanity will meet a dead end. The journey towards a more transparent AI future has begun, and it holds the key to unlocking the true potential of artificial intelligence for the benefit of all.

Source: www.darpa.mil

https://en.m.wikipedia.org/wiki/Explainable_artificial_intelligence

Mihály, Héder (2023). "Explainable AI: A Brief History of the Concept" (PDF). ERCIM News (134): 9–10.